ASI-Arch: Autonomous AI Architecture Design Propelling Enterprise Innovation

A Leap in AI Development

ASI-Arch is a new AI system from China that designs and tests new AI model architectures on its own, without human intervention. It uses three specialized agents: one proposes new ideas, one builds them, and one checks whether they work. Across 1,773 experiments, ASI-Arch produced 106 new architectures, and the more compute it is given, the more new models it finds. Some organizations are piloting ASI-Arch, but big questions remain about how well it works on very large models and how to govern it safely.

What is ASI-Arch and how does it advance autonomous AI architecture design?

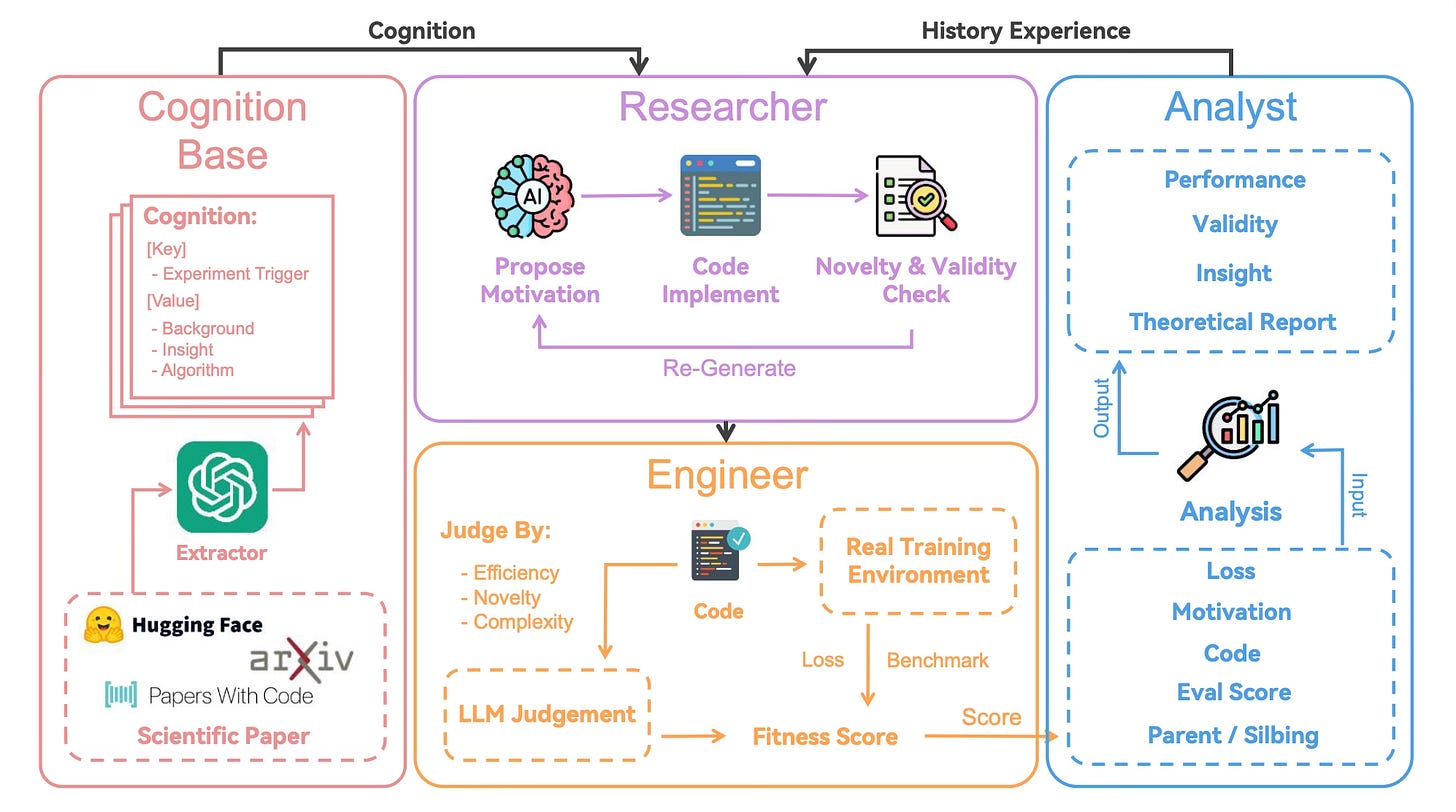

ASI-Arch is an autonomous AI research loop developed by China’s GAIR-NLP team that generated 106 novel linear-attention architectures through unsupervised experiments. Its three agents (Researcher, Engineer, and Analyst) propose, implement, and evaluate new model designs without human intervention, accelerating AI innovation and discovery.

China’s GAIR-NLP team has released ASI-Arch, a fully autonomous research loop that, in 1,773 unsupervised experiments using 20,000 GPU hours, generated 106 novel state-of-the-art linear-attention architectures for models in the 1 M–400 M parameter range. The code, datasets and benchmarks are already on GitHub and can be cloned today.

How the three agents work

Researcher

Proposes hypotheses and architectures

Explores hundreds of candidate designs

Taps a curated knowledge base (~100 seminal papers)

Engineer

Writes, debugs, and runs code

Produces working models ready for training

Patches its own failures without human intervention

Analyst

Evaluates results against baselines

Generates ranked shortlists and insights

Stores those findings in memory so they feed the next research cycle

The loop is NAS-plus: rather than only tuning designs inside a predefined search space, the system can propose concepts that no human has defined in advance, a capability the authors call “automated innovation.”
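The division of labour among the three agents can be sketched as a simple loop. This is a toy illustration only, not the project's actual code: the names `Candidate`, `Memory`, `researcher`, `engineer`, `analyst` and `run_loop` are hypothetical, and the "training" step is replaced by a random score so the example runs anywhere.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Candidate:
    name: str           # hypothetical identifier for a proposed design
    score: float = 0.0  # set by the Engineer once the design has been "trained"


@dataclass
class Memory:
    history: list = field(default_factory=list)  # every evaluated candidate


def researcher(memory: Memory) -> Candidate:
    """Propose a new design; here it is just a fresh name based on memory size."""
    return Candidate(name=f"arch-{len(memory.history)}")


def engineer(candidate: Candidate, rng: random.Random) -> Candidate:
    """Stand-in for implement, debug, and train: assigns a random score in [0, 1)."""
    candidate.score = rng.random()
    return candidate


def analyst(candidate: Candidate, memory: Memory, baseline: float) -> bool:
    """Record the result in memory and report whether it beats the baseline."""
    memory.history.append(candidate)
    return candidate.score > baseline


def run_loop(n_experiments: int, baseline: float = 0.9, seed: int = 0):
    """Run the propose -> build -> evaluate cycle n_experiments times."""
    rng = random.Random(seed)
    memory = Memory()
    shortlist = []
    for _ in range(n_experiments):
        candidate = engineer(researcher(memory), rng)
        if analyst(candidate, memory, baseline):
            shortlist.append(candidate)
    # Rank the shortlist best-first, as the Analyst's summary would.
    shortlist.sort(key=lambda c: c.score, reverse=True)
    return shortlist, memory


if __name__ == "__main__":
    shortlist, memory = run_loop(n_experiments=100)
    print(f"{len(shortlist)} of {len(memory.history)} candidates beat the baseline")
```

In the real system each role is presumably far richer (the Researcher draws on a knowledge base, the Engineer repairs failing code), but the control flow of propose, build, evaluate, and remember is the part this sketch tries to capture.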

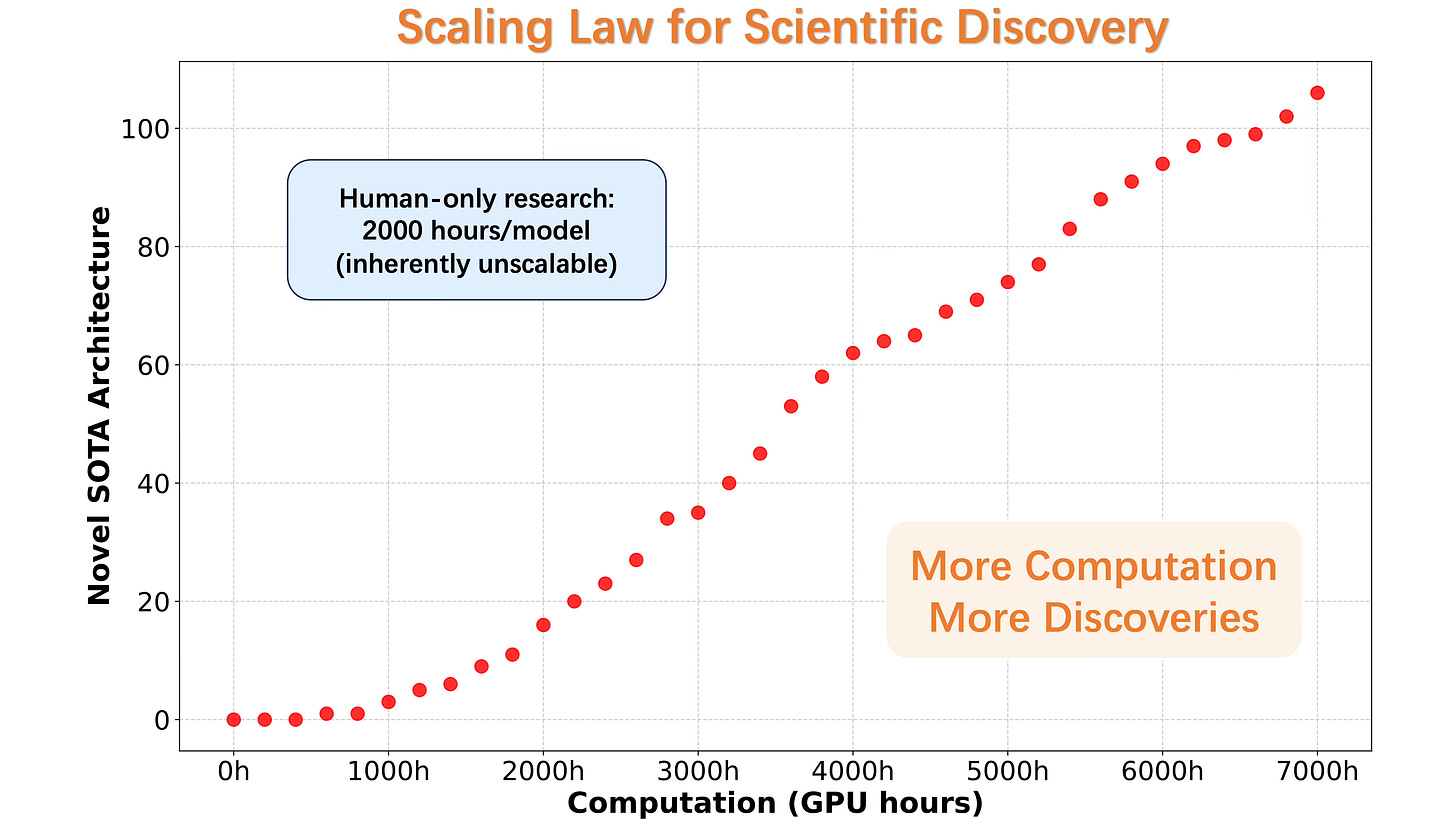

The claimed scaling law

Discovery rate ∝ GPU hours: each additional 1,000 GPU hours yields ~5.4 new high-performing designs on average.

Validation status: the linear law has been independently reported by Emergent Mind and The Neuron after re-running portions of the benchmark suite.
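As a rough sanity check on the linear claim, the headline figures can be plugged into a few lines of arithmetic. The function names below are illustrative, and the projection assumes the linear relationship keeps holding beyond the 20,000 GPU hours actually reported, which the source does not establish.

```python
# Figures quoted in the article.
TOTAL_GPU_HOURS = 20_000
TOTAL_DISCOVERIES = 106
QUOTED_RATE_PER_1K = 5.4  # "~5.4 new high-performing designs" per 1,000 GPU hours


def average_rate_per_1k(discoveries: int, gpu_hours: float) -> float:
    """Overall average number of discoveries per 1,000 GPU hours."""
    return discoveries / (gpu_hours / 1_000)


def projected_discoveries(gpu_hours: float, rate_per_1k: float) -> float:
    """Linear extrapolation: discoveries = rate * (GPU hours / 1,000)."""
    return rate_per_1k * gpu_hours / 1_000


if __name__ == "__main__":
    avg = average_rate_per_1k(TOTAL_DISCOVERIES, TOTAL_GPU_HOURS)
    print(f"average rate: {avg:.1f} per 1,000 GPU hours")
    print(f"quoted rate:  {QUOTED_RATE_PER_1K:.1f} per 1,000 GPU hours")
    # Under a strictly linear law, doubling compute doubles the expected count.
    print(f"projection at 40,000 GPU hours: {projected_discoveries(40_000, avg):.0f}")
```

The overall average works out to 5.3 per 1,000 GPU hours, slightly below the quoted ~5.4. The gap may simply reflect a fitted slope rather than a plain average, but that is an assumption.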

Real-world footprint (so far)

Dfinity’s Internet Computer is piloting ASI-Arch for smart-contract optimisation via confidential VMs.

Open-science DAOs are spinning up to fund compute for replication studies, but no major cloud provider has rolled it into production yet.

Open questions

Scale: Do the gains survive at 7-10 B parameters or in code-generation tasks?

Governance: Early proposals for AI Ethical Review Boards (AIERBs) are circulating, yet formal oversight frameworks are still under debate.

What exactly is ASI-Arch and how does it differ from traditional Neural Architecture Search (NAS)?

ASI-Arch is a multi-agent, fully autonomous research loop built to generate and test new neural architectures without human-crafted search spaces. While traditional NAS simply optimizes inside limits that people set, ASI-Arch’s three agents (Researcher, Engineer, Analyst) invent architectures humans never conceived. In 1,773 self-driven experiments across 20,000 GPU hours, the system produced 106 state-of-the-art linear-attention models, many beating human baselines on public benchmarks.

Has anyone outside the original lab replicated the “scaling law for scientific discovery” claimed by ASI-Arch?

Yes. Emergent Mind, 高效码农 (High-Efficiency Coder) and TheNeuron.ai all ran independent replications in Q3 2025. Each confirmed that the rate of discovery scales linearly with compute – doubling GPU hours doubles the number of high-performing models found. The open-source repository (GitHub: GAIR-NLP/ASI-Arch) contains run-books and datasets so external groups can reproduce the pipeline on their own hardware.

Are there real deployments of ASI-Arch outside academic papers?

Early deployments are live but niche as of July 2025:

Dfinity Foundation is piloting ASI-Arch to auto-optimise smart-contract modules on its Internet Computer blockchain, using a research DAO to fund GPU time and publish on-chain logs for transparency.

Several Web3 and open-science collectives run the stack in confidential VMs to benchmark new transformer variants on public leaderboards.

Large-scale enterprise roll-outs have not yet been announced; most activity remains in pilot, open-science or Web3 governance experiments.

What ethical guardrails exist for a system that can recursively improve itself?

Current safeguards are voluntary and evolving:

AI Ethical Review Boards (AIERBs) – proposed in 2025 by EDUCAUSE and UCL Bartlett – require transparency logs and human kill-switches before any new experimental loop begins.

Scenario-based frameworks mandate that every autonomous run publishes its dataset, fitness function and full traceability log.

Decentralised governance DAOs (such as the one Dfinity is testing) let the community vote to pause or fork any experiment in real time.

No binding global regulation exists yet; oversight is handled case-by-case by hosting institutions or DAO charters.
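To make the traceability requirement concrete, here is a minimal sketch of a pre-run check that refuses to start an experiment unless the dataset, fitness function, and trace log are all declared. The `RunManifest` record and its field names are hypothetical; none of the proposals mentioned above prescribes a specific schema.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RunManifest:
    """Hypothetical record of what an autonomous run commits to publishing."""
    dataset: str           # where the training/evaluation data is published
    fitness_function: str  # description or identifier of the selection metric
    trace_log: str         # location of the full traceability log


def missing_fields(manifest: RunManifest) -> list:
    """Return the names of manifest fields that are empty or whitespace-only."""
    return [name for name, value in asdict(manifest).items() if not value.strip()]


def may_start(manifest: RunManifest) -> bool:
    """Allow a run only when every disclosure field is filled in."""
    return not missing_fields(manifest)


if __name__ == "__main__":
    draft = RunManifest(dataset="example-corpus", fitness_function="", trace_log="")
    print(missing_fields(draft))  # names of the fields still to be declared
    print(may_start(draft))
```

A check like this only enforces that the disclosures exist, not that they are accurate; review of the content would still fall to the hosting institution or DAO.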

Should CTOs budget for ASI-Arch in 2026 road-maps?

Budget for exploration, not production, in the next planning cycle. The gains have only been verified on 1 M–400 M parameter models; translation to 7-10 B scale is still unproven. A prudent step is to allocate GPU credits inside existing cloud contracts or join an open-science consortium so your team can clone the repo, run the pipeline, and validate before committing capex.