How to Classify Articles Using Python and ChatGPT in 5 Minutes

A simple guide for using ChatGPT programmatically in real-world tasks

The rise of language models like ChatGPT has opened up a new frontier in content generation and natural language processing (NLP) tasks. Beyond simple text generation, ChatGPT can perform tasks ranging from summarizing lengthy articles to classifying them based on content. If you're interested in automating content classification tasks, this article is for you. Below, we’ll walk you through a Python program that leverages ChatGPT to classify articles and add metadata such as tags, a summary, countries, and categories.

ChatGPT's abilities extend far beyond conversation. By framing your query as a prompt, you can turn this conversational model into a specialized worker that performs tasks like summarization and classification. You can then parse its output into a machine-readable format like JSON, which databases or other applications can ingest. My library `easygpt` makes interacting with ChatGPT even more straightforward by offering simple methods for these tasks.
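In the simplest case, parsing the reply is a single `json.loads` call. If a reply ever arrives wrapped in a Markdown code fence or with a sentence around the JSON, a slightly more tolerant parser helps. The sketch below is not part of `easygpt` or the original script; `extract_json` is a hypothetical helper name.

```python
import json
import re

# Matches a Markdown code fence (``` or ```json) and captures what is inside it
FENCE_RE = re.compile(r"```(?:json)?\s*(.*?)\s*```", re.DOTALL)


def extract_json(reply: str) -> dict:
    """Parse the JSON object in a model reply.

    Tolerates a surrounding Markdown fence or short text before/after the
    object. Raises json.JSONDecodeError (a ValueError) if no valid JSON is
    found, and ValueError if the JSON is not an object.
    """
    text = reply.strip()
    match = FENCE_RE.search(text)
    if match:
        text = match.group(1)
    else:
        # Fall back to the span from the first "{" to the last "}"
        start, end = text.find("{"), text.rfind("}")
        if start != -1 and end > start:
            text = text[start:end + 1]
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data
```

Because `json.JSONDecodeError` is a subclass of `ValueError`, catching `ValueError` covers both failure modes.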

Today I want to show you how easy it is to classify articles using ChatGPT and a few lines of code. Since Airtable is my main data storage backend, I will work with it here, but it is not mandatory.

So the task we will be performing:

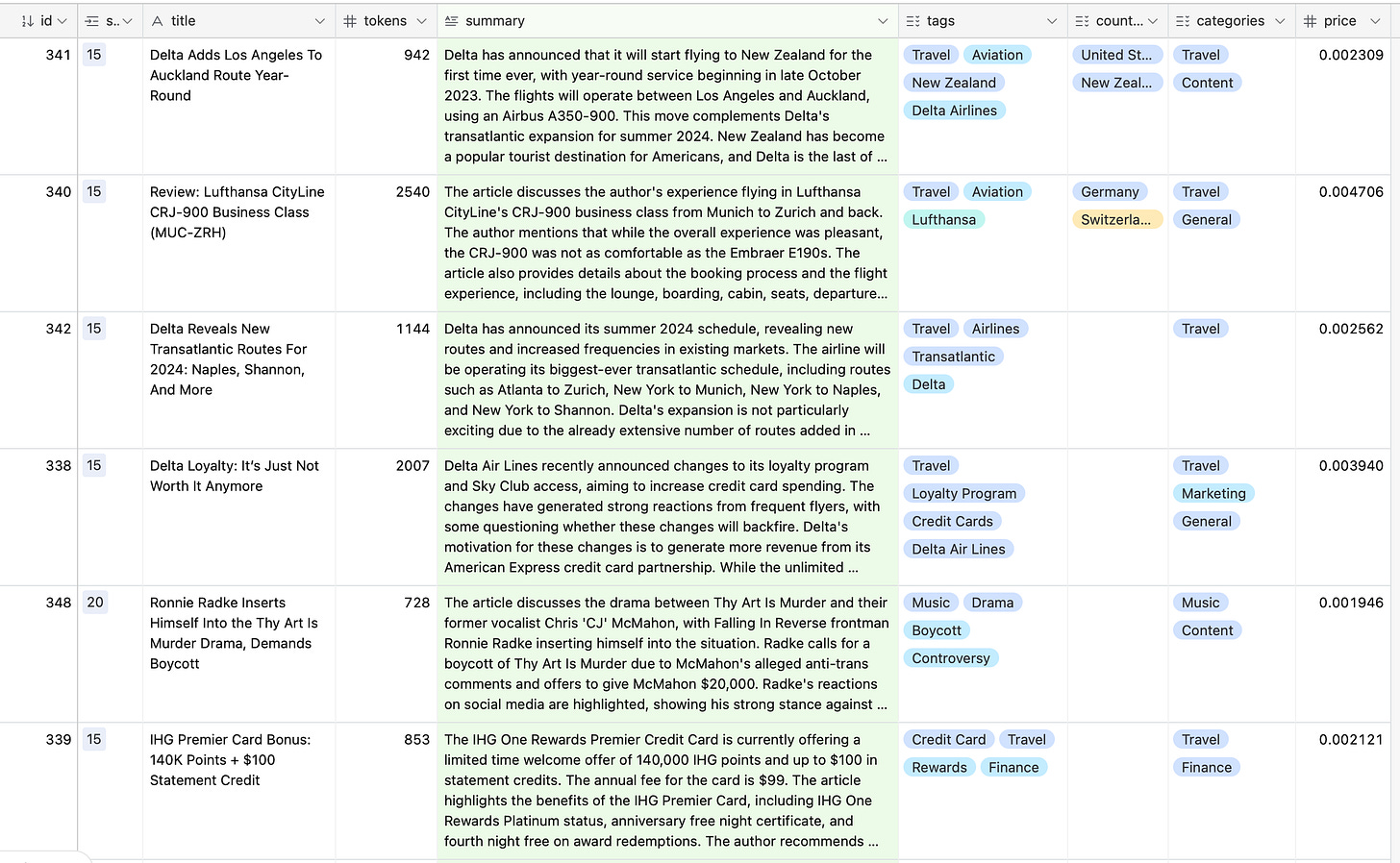

We have a bunch of text articles uploaded to Airtable. We need to classify each of them. Classification involves creating a concise summary of the article to simplify future work, generating relevant tags, assigning categories of interest, and identifying any mentioned countries.

Crafting the prompt - the most important part

The prompt is the most important part of working with ChatGPT: how well the task is performed depends on it. In this case, it will be a system message that precisely instructs the model on the desired output.

Here is a carefully designed prompt that I've created and field-tested:

```text
You are an AI parser that parses content to classify it. You always respond with JSON. Your aim is to write a summary of the article that is 4-5 sentences long, 4 tags for the article as a list, and the countries mentioned, if any (if cities are mentioned and you know their countries, add those countries). Also select which categories, if any, apply to this article from this limited list:

travel, motivation, religion, music, tv, health, food, catering, tech, marketing, ai, content, general

Select up to 3, and use only these categories.

Here is an example of your response:

{
  "summary": "The article delves into the booming market for plant-based food alternatives in Berlin, Germany. It discusses how technology like 3D printing is innovating meat substitutes, the health benefits of shifting to a plant-based diet, and why Berlin has become the hub for this growing industry. Local startups are attracting global investment, positioning Berlin as a leading city in sustainable food technology.",
  "tags": ["Health", "Tech", "Food", "Sustainability"],
  "countries": ["Germany"],
  "categories": ["Health", "Tech", "Food"]
}

And one more example:

{
  "summary": "The article explores the impact of Japanese minimalist design on contemporary marketing strategies. It highlights how the principle of 'Ma' (the space in between) can be leveraged in advertising for stronger emotional impact. Case studies from renowned brands like Apple and Uniqlo are dissected to demonstrate this influence. The article argues that adopting minimalist design leads to clearer messaging and greater consumer engagement.",
  "tags": ["Marketing", "Design", "Culture", "Consumer Engagement"],
  "countries": ["Japan", "United States"],
  "categories": ["Marketing", "Content"]
}
```

It's a long prompt, but consistent responses from ChatGPT are essential. Providing multiple examples in the system message serves three purposes:

Enhanced Reliability: Providing a more nuanced set of examples minimizes ambiguity, rendering the model's output more reliable.

Contextual Richness: A range of examples allows ChatGPT to understand the varying scope of your requirements.

Structural Fidelity: Multiple examples instruct the model on the precise format you desire, minimizing the chances of receiving an improperly formatted JSON response.
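Examples make the output more reliable, but they do not guarantee it. For instance, the prompt's category list is lowercase while its own examples use capitalized names such as "Health", so replies may come back in either form. A post-processing step, which is not part of the original script, can enforce the list and the three-category limit. `ALLOWED_CATEGORIES` and `clean_categories` are hypothetical names; whether you keep lowercase names or map them to your Airtable option names is your choice.

```python
# The category list from the system message
ALLOWED_CATEGORIES = {
    "travel", "motivation", "religion", "music", "tv", "health", "food",
    "catering", "tech", "marketing", "ai", "content", "general",
}
MAX_CATEGORIES = 3  # "select up to 3" in the prompt


def clean_categories(raw: list[str]) -> list[str]:
    """Keep only allowed categories (compared case-insensitively),
    drop duplicates, preserve order, and cap at MAX_CATEGORIES."""
    result: list[str] = []
    for item in raw:
        name = item.strip().lower()
        if name in ALLOWED_CATEGORIES and name not in result:
            result.append(name)
    return result[:MAX_CATEGORIES]
```

For example, `clean_categories(["Marketing", "Design", "AI", "Content"])` drops "Design", which is not on the list, and returns the three remaining names in lowercase.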

So let’s move to the coding part.

Creating the classification Python program

We will use four libraries. Only openai is essential; you can rewrite my code to do the classification without the others.

PyAirtable: The Python wrapper for Airtable's API.

OpenAI: The official Python package for OpenAI's API.

EasyGPT: My custom-built wrapper that simplifies interactions with ChatGPT.

TikToken: OpenAI's tokenizer, used to count tokens (required by EasyGPT).

Core Loop for Article Classification

Set some constants and initialize the EasyGPT instance:

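```python
import json
import logging

import openai
from easygpt import EasyGPT  # import path assumed; check the easygpt repository

# Constants
TOKENS_FOR_16K = 3300     # switch to the 16k model above this many tokens
TOKENS_TO_CANCEL = 10000  # skip classification if the article is too big
CLASSIFY_PROMPT = "Classify the following article:\n\n[article]"
CLASSIFY_SYSTEM_MESSAGE = """ Insert my system message prompt ... """

# Initialize EasyGPT
gpt = EasyGPT(openai, "gpt-3.5-turbo")

# table_news: a pyairtable table object for the articles table (setup omitted here)
```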

Retrieve articles that lack a summary from Airtable and start the loop.

```python
# Fetch articles that have no summary yet
news_to_classify = table_news.all(formula="AND({summary}='')")

# Loop to classify each article
for article in news_to_classify:
```

Gauge whether the article's length requires switching to the 16k model.

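```python
    content = article["fields"]["content"]
    tokens = gpt.tokens_in_string(content)

    if tokens > TOKENS_TO_CANCEL:  # too big even for the 16k model
        logging.warning("Article too long (%d tokens), skipping", tokens)
        continue

    # Choose the model per article so one long article doesn't switch all later ones
    model = "gpt-3.5-turbo-16k" if tokens > TOKENS_FOR_16K else "gpt-3.5-turbo"
    gpt = EasyGPT(openai, model)
    logging.info("Using %s", model)
```

This section skips articles longer than TOKENS_TO_CANCEL (10,000 tokens) and switches to the 16k-context model when an article exceeds TOKENS_FOR_16K (3,300 tokens). The switch is needed because the standard gpt-3.5-turbo model had a 4,096-token context window at the time, and that window has to hold the system message, the article, and the model's reply.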

Classify the article using ChatGPT.

```python
    prompt = CLASSIFY_PROMPT.replace("[article]", content)
    gpt.set_system_message(CLASSIFY_SYSTEM_MESSAGE)
    answer, input_price, output_price = gpt.ask(prompt)
```

The article is classified by sending a prompt to gpt-3.5-turbo (or its 16k variant). The prompt is built by replacing the [article] placeholder in CLASSIFY_PROMPT with the actual article content. The gpt.ask() method then returns the classification along with the cost of the input and output tokens (input_price and output_price).
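EasyGPT hides the request format. If you want to call the OpenAI API directly instead, the system message and the filled-in prompt become a two-element message list. The sketch below assumes this is roughly what EasyGPT's `set_system_message` and `ask` build (check its source to confirm); `build_messages` is a hypothetical helper, and the system message is a placeholder.

```python
# Same template as in the script; the system message is a placeholder here
CLASSIFY_PROMPT = "Classify the following article:\n\n[article]"
CLASSIFY_SYSTEM_MESSAGE = "You are an AI parser ..."  # paste the full prompt here


def build_messages(content: str) -> list[dict]:
    """Build a Chat Completions-style message list for one article."""
    prompt = CLASSIFY_PROMPT.replace("[article]", content)
    return [
        # Instructions and JSON examples go in the system message
        {"role": "system", "content": CLASSIFY_SYSTEM_MESSAGE},
        # The article itself goes in the user message
        {"role": "user", "content": prompt},
    ]


messages = build_messages("Berlin startups are printing plant-based steaks.")
```

With the pre-1.0 openai package, this list would be passed as `openai.ChatCompletion.create(model=..., messages=messages)`; with openai 1.x, the equivalent call is `client.chat.completions.create(model=..., messages=messages)`.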

Validate the JSON output and handle errors, if any.

```python
    try:
        parsed_json = json.loads(answer)
        price = input_price + output_price

        if all(key in parsed_json for key in ["summary", "tags", "categories"]):
            classification = {
                "summary": parsed_json["summary"],
                "tags": ", ".join(parsed_json["tags"]),
                "categories": ", ".join(parsed_json["categories"]),
                "tokens": tokens,
                "price": price,
            }

            # Check if we have countries in the response
            if "countries" in parsed_json:
                print("Countries: " + ", ".join(parsed_json["countries"]))
                if len(parsed_json["countries"]) > 0:
                    classification["countries"] = ", ".join(parsed_json["countries"])
                    print("Adding countries: " + classification["countries"])

            logging.info("JSON valid, saving to Airtable")
            table_news.update(article["id"], classification, typecast=True)
        else:
            logging.error("JSON missing required fields, skipping")
            table_news.update(article["id"], {"errors": article["fields"].get("errors", 0) + 1})

    except json.JSONDecodeError:
        logging.error("Invalid JSON, skipping")
        table_news.update(article["id"], {"errors": article["fields"].get("errors", 0) + 1})
```

Here, the answer returned by the model is loaded into a Python dictionary (parsed_json). The script then checks whether all required fields ("summary", "tags", "categories") are present. If so, the data is considered valid and saved to Airtable. Otherwise, an error is logged and the errors field in Airtable is incremented.

Additionally, the script checks for the countries key and, if the list is not empty, saves the countries to Airtable. This field is optional, so its absence doesn't make the JSON invalid.
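Separating this validation from the Airtable calls makes it testable without network access. Here is a sketch that mirrors the checks above as a pure function; `to_airtable_fields` is a hypothetical name, not part of the original script. A `None` result corresponds to the branches that increment the errors field.

```python
import json
from typing import Optional

REQUIRED_KEYS = ("summary", "tags", "categories")


def to_airtable_fields(answer: str, tokens: int, price: float) -> Optional[dict]:
    """Turn the model's JSON answer into Airtable field values.

    Returns None if the answer is not valid JSON or lacks a required key.
    """
    try:
        parsed = json.loads(answer)
    except json.JSONDecodeError:
        return None
    if not isinstance(parsed, dict) or not all(k in parsed for k in REQUIRED_KEYS):
        return None
    fields = {
        "summary": parsed["summary"],
        "tags": ", ".join(parsed["tags"]),
        "categories": ", ".join(parsed["categories"]),
        "tokens": tokens,
        "price": price,
    }
    # "countries" is optional: only add it when the list is non-empty
    countries = parsed.get("countries") or []
    if countries:
        fields["countries"] = ", ".join(countries)
    return fields
```

In the loop, a returned dict would be passed to `table_news.update(article["id"], fields, typecast=True)`.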

You can find the complete code on my GitHub.

So it works…

By leveraging ChatGPT, this Python script can automate a tedious part of managing content. In about 10 minutes, it classified 255 articles at a total cost of $0.77.
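Per article, assuming the 10 minutes and $0.77 cover the whole batch, that works out to roughly a third of a cent and a couple of seconds:

```latex
\frac{\$0.77}{255} \approx \$0.0030 \text{ per article}, \qquad
\frac{10 \times 60\ \text{s}}{255} \approx 2.4\ \text{s per article}
```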

Language models like ChatGPT have made content automation accessible and efficient. Whether you work on your own or as part of a larger team, integrating ChatGPT into your content management pipeline can significantly boost productivity while producing consistent, structured output.