OpenAI's Evolution: Introducing Fine-Tuning for GPT 3.5 Models

Achieving Task-Specific Mastery with the Latest Customization Features.

OpenAI has released the fine-tuning feature for GPT-3.5 Turbo, and it will be available for GPT-4 in the fall. This update allows developers to customize models so that they better handle specific tasks and can be easily scaled. Initial tests showed that the finely-tuned version of GPT-3.5 Turbo can compete with or even surpass the basic capabilities of GPT-4 in narrow tasks. The data sent to and from the fine-tuning API belongs to the client and is not used by OpenAI or other organizations to train other models.

Here are a few use cases for fine-tuning provided by OpenAI:

Improved Controllability: Allows the model to better follow instructions, such as responding briefly or always reacting in a certain tone or language.

More Reliable Output Formatting: For tasks that require a specific format for the answer, fine-tuning enhances the model's ability to uniformly format them.

Individual Tone: Allows for precise adjustment of the qualitative feel of the model's output to better match the brand's voice.

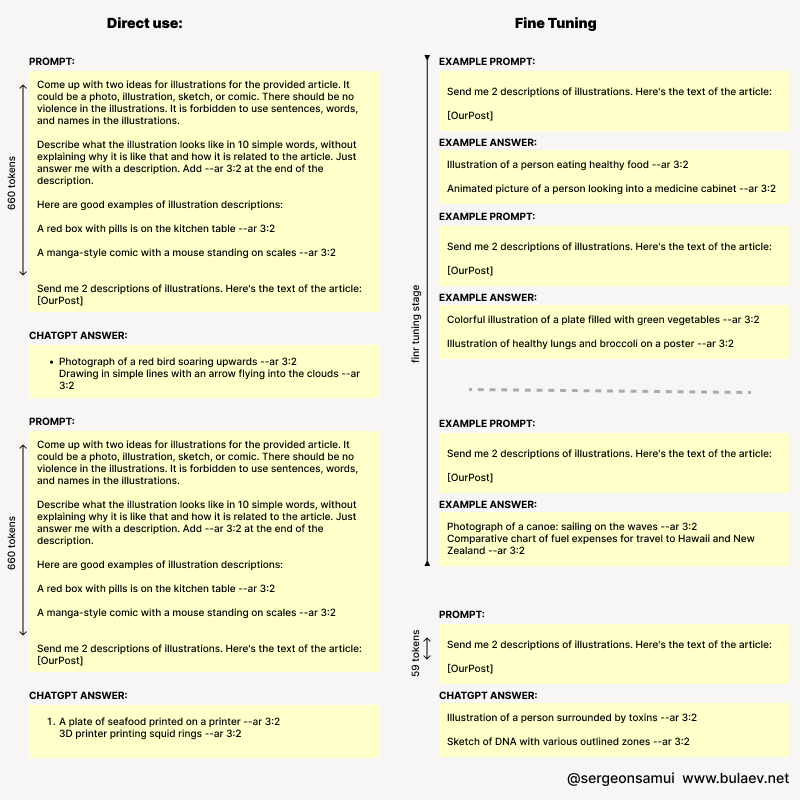

Fine-tuning also enables users to significantly reduce the size of queries, providing comparable quality. The contextual window size when working with fine-tuning has doubled - up to 4k tokens. According to OpenAI, the first users were able to reduce the size of queries by 90%.

Fine-tuning is most effective when combined with other methods, such as query engineering, information extraction, and function calling. More information can be found in the fine-tuning guide.

How does fine-tuning work? We create a set of prompt examples and expected answers to them. We upload and "compile" them, and then we can use the fine-tuned model in the same way as the regular one. We spend money on "retraining," but save on future use. Right now, this can be done through the API, but a UI interface is expected soon.

For example, I often ask the model to respond to me in JSON format, and I see how high the variability of the answers is. Providing specific examples of answers in the prompt (increasing its size) improves stability, though not radically. The more examples, the higher the cost. Another personal use case for me is generating queries for MidJourney - I will definitely create a model specifically tailored for this.