The Dead Internet Theory: When Algorithms Replace Humans

How the internet is quietly transforming into a network of bots and algorithmic interactions

The Dead Internet Theory claims that a large portion of the modern internet consists of automatically generated content and bots rather than real people. What seemed like a paranoid conspiracy theory only a few years ago now finds partial support in peer-reviewed research and in traffic statistics published by major companies.

Origins and Evolution

The theory first gained attention in 2021 after a viral forum post titled “Dead Internet Theory: Most Of The Internet Is Fake.” Initially dismissed as a conspiracy theory, it has gained credibility as AI technologies have matured, and parts of it now read like predictions coming true before our eyes.

The roots of the idea can be traced back to the early 2010s, when researchers began noticing the growth of automated traffic. But it was the explosive development of generative AI in 2022-2024 that made the theory feel far more plausible.

The Stakes Are High

Understanding the scale of internet automation is critically important for information hygiene and preserving authentic human interactions. If content and communication are increasingly generated by algorithms, this fundamentally changes the nature of social connections and the information landscape.

The numbers are striking: according to Imperva’s 2024 data, automated traffic rose from 42.3% of all internet traffic in 2021 to a record 49.6% in 2023. Bots now generate almost as much traffic as living humans, and projections show that automated traffic could exceed 50% by 2028.
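
To make the size of that shift concrete, the arithmetic on the two Imperva figures quoted above can be sketched in a few lines of Python. The function name `traffic_shift` is hypothetical and used only for illustration; the inputs are the percentages cited in this post, not new data.

```python
def traffic_shift(start_share: float, end_share: float) -> dict:
    """Compare two bot-traffic shares, both given in percent of all traffic."""
    point_change = end_share - start_share              # change in percentage points
    relative_change = point_change / start_share * 100  # growth relative to the start
    gap_to_half = 50.0 - end_share                      # distance from the 50% mark
    return {
        "point_change": round(point_change, 1),
        "relative_change": round(relative_change, 1),
        "gap_to_half": round(gap_to_half, 1),
    }


# Figures cited above: 42.3% (2021) and 49.6% (2023).
shift = traffic_shift(42.3, 49.6)
print(shift)
```

In other words, the bot share grew by 7.3 percentage points in two years (about 17% in relative terms) and sat 0.4 points below the halfway mark in 2023.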

Key Features of the Modern “Dead” Internet

Automated Traffic

According to the same 2023 data, approximately 50% of web traffic is generated not by people, but by automated programs. These aren’t only search engine crawlers: many are sophisticated systems built to mimic human behavior closely.

Habsburg Syndrome (Habsburg AI)

Habsburg AI describes what happens when AI trains on data created by other AI: content quality degrades with each generation of models. The term was coined by researcher Jathan Sadowski, drawing a parallel with the degeneration of the Habsburg dynasty due to inbreeding.

Scientific confirmation: In 2024, the journal Nature published research by Ilia Shumailov and colleagues showing that AI models “collapse” when trained on recursively generated data. Indiscriminate training on model-generated content causes irreversible defects in the resulting models, in which “tails of the original content distribution disappear”: the models gradually forget rare cases and lose information about the real world.

Researchers from Rice University reported a similar pattern, which they call Model Autophagy Disorder: after five generations of training on their own output data, AI models demonstrate serious quality degradation. This “inbreeding” leads to loss of diversity and accuracy.
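
The mechanism can be illustrated with a toy simulation. This is a minimal sketch, not the method used in the Nature or Rice studies: the “model” here is just a table of token frequencies, and each generation is refit on a finite sample drawn from the previous one. The token names, the starting probabilities, and the function names `next_generation`, `simulate`, and `support_size` are all assumptions made for illustration.

```python
import random
from collections import Counter

# Hypothetical starting distribution: a few common tokens and a long, thin tail.
INITIAL = {"common": 0.60, "frequent": 0.25, "uncommon": 0.10,
           "rare": 0.04, "very_rare": 0.01}


def next_generation(probs, sample_size, rng):
    """Draw a finite sample from the current model, then refit by counting."""
    alive = [t for t in probs if probs[t] > 0]   # tokens the model can still produce
    weights = [probs[t] for t in alive]
    sample = rng.choices(alive, weights=weights, k=sample_size)
    counts = Counter(sample)
    # A token that was not sampled gets probability 0 and can never come back.
    return {t: counts[t] / sample_size for t in probs}


def simulate(probs, generations, sample_size, seed=0):
    """Return the list of distributions, starting with the original one."""
    rng = random.Random(seed)
    history = [dict(probs)]
    for _ in range(generations):
        probs = next_generation(probs, sample_size, rng)
        history.append(probs)
    return history


def support_size(probs):
    """Number of tokens the model still assigns non-zero probability."""
    return sum(1 for p in probs.values() if p > 0)


if __name__ == "__main__":
    history = simulate(INITIAL, generations=40, sample_size=25, seed=3)
    print([support_size(p) for p in history])
```

Whatever the random seed, the number of surviving tokens can only stay the same or shrink from one generation to the next, because a token that drops out of one sample is never generated again. That one-way loss of rare items is the toy analogue of the disappearing tails described above.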

Artificial Popularity

Bots are used to artificially inflate engagement metrics (likes, comments, reposts). This was particularly evident on Facebook in 2024, where AI-generated images dubbed “AI slop” began going viral. Fake images of flight attendants, children with artwork, and various “Shrimp Jesus” depictions gathered thousands of likes and shares.

Automated Curation

Algorithmic systems that determine what content will be shown to users are becoming increasingly aggressive in promoting AI-generated content.

Real-World Implementation

Major platforms like Meta (Facebook, Instagram) are implementing more AI features. For example, in 2025, Instagram began testing a “Write with Meta AI” function that analyzes photos and suggests ready-made comments. Platform X (formerly Twitter) uses user content to train its AI assistant Grok.

As a result, users increasingly interact with automatically generated content, sometimes without realizing it. Social networks are transforming from platforms for human communication into networks of algorithmic and bot interactions.

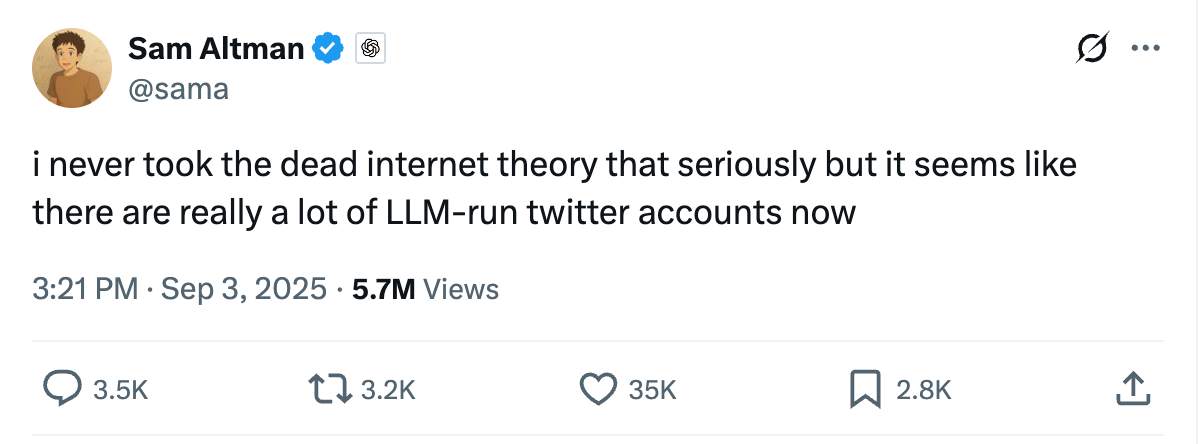

Industry voice: Even Sam Altman, CEO of OpenAI, has said he takes the Dead Internet Theory more seriously than he once did, remarking in 2025 that there now seem to be a lot of accounts on X run by large language models.

Pitfalls and Threats

Information Quality Degradation

When AI begins training on content created by other AI, a “telephone game” effect emerges - with each generation, information becomes more distorted and less connected to reality.

Loss of Human Context

Algorithms don’t understand the subtleties of human communication, cultural nuances, and emotional context. Their widespread use could lead to the impoverishment and standardization of communication.

Platform Enshittification

Writer Cory Doctorow describes platform evolution as a process of “enshittification”: first platforms serve their users well, then they exploit users to attract business customers, and finally they exploit those business customers to maximize their own profits.

Industry Response

The problem has become serious enough that major tech companies are starting to take action. For example, in 2024 Cloudflare proposed letting website owners limit bot access to their sites and charge bots for entry.
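
For context, the long-standing voluntary way for a site to tell crawlers to stay out is a robots.txt file. The sketch below is not Cloudflare’s mechanism; it only shows how such a rule is interpreted, using Python’s standard `urllib.robotparser` module. `GPTBot` is the user agent OpenAI publishes for its crawler; the `example.com` URL and the `ExampleBrowser` agent are placeholders.

```python
from urllib.robotparser import RobotFileParser

# A robots.txt that asks one AI crawler to stay out and allows everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

URL = "https://example.com/article"  # placeholder URL
crawler_allowed = parser.can_fetch("GPTBot", URL)
browser_allowed = parser.can_fetch("ExampleBrowser", URL)

print("GPTBot allowed:", crawler_allowed)
print("ExampleBrowser allowed:", browser_allowed)
```

The rule blocks the named crawler and allows the other agent, but only for bots that choose to honor it. Because robots.txt is advisory, proposals like the one above move enforcement to the network layer instead.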

Academic Research 2024-2025

January 2025 was marked by the publication of a comprehensive academic survey “The Dead Internet Theory: A Survey on Artificial Interactions and the Future of Social Media” on arXiv, which explores the origins, core claims, and implications of the theory.

The journal AI & Society published research in 2024 about artificial influencers and their connection to Dead Internet Theory, showing how the line between real and virtual personalities is blurring.

What This Means for the Future

We stand on the threshold of a fundamental transformation of the internet. If automated traffic does pass 50% by 2028, as projected, bots will account for most activity on the network, and a growing share of the content we encounter online may be created by machines for machines.

The question isn’t whether this will happen, but how we’ll adapt to it. Do we need new ways to verify human content? Should platforms be required to label AI-generated content? How do we preserve authentic human connections in a digital world?

The Broader Implications

The Dead Internet Theory raises profound questions about the nature of online reality and human agency in digital spaces. If our feeds, recommendations, and interactions are increasingly mediated by AI systems trained on AI-generated content, we risk creating a closed loop where human culture becomes increasingly divorced from digital culture.

This isn’t just about technology - it’s about the future of human communication, creativity, and connection. The internet was supposed to democratize information and bring people together. Instead, we might be witnessing its transformation into a hall of mirrors where algorithms talk to algorithms while humans become increasingly isolated spectators.

What to Read/Watch

Scientific Research:

Shumailov, I. et al. “AI models collapse when trained on recursively generated data” Nature (2024)

Shumailov, I. et al. “The Curse of Recursion: Training on Generated Data Makes Models Forget” arXiv preprint (2023)

Alemohammad, S. et al. “Self-Consuming Generative Models Go MAD” (Rice University research on “Model Autophagy Disorder”)

Media and Analysis:

The Atlantic: “Maybe You Missed It, but the Internet ‘Died’ Five Years Ago”

TIME Magazine: “Sam Altman Voices Concern Over Dead Internet Theory”

Popular Mechanics: “The Internet Will Be More Dead Than Alive Within 3 Years”

Cory Doctorow’s “The Enshittification of TikTok” on platform degradation

VICE: “‘Dead Internet Theory’ Is Back Thanks to All of That AI Slop”

The Conversation: “The ‘dead internet theory’ makes eerie claims about an AI-run web”

The Dead Internet Theory isn’t just a technological trend - it’s a challenge to our understanding of what it means to be human in the digital age. While we debate whether this threat is real, algorithms are already shaping our perception of the world.

Share your thoughts: Have you noticed signs of the “dead internet” in your online experience?
