When Three AIs Spent a Week Building Minecraft Civilizations (And One Jumped Off a Cliff)

What happens when you give three of the world’s most advanced AI models virtual bodies and set them loose in Minecraft? Here’s what happened.

The Experiment

The experiment used Mindcraft, open-source code that lets AI models control characters in Minecraft. Think of it as giving these text-generating systems actual bodies and senses. They could see blocks, craft tools, and wander around making decisions in real time.

Each AI got its own land, the same starting resources, and one instruction: survive and build whatever you want.

No script. No guardrails. Just three different AIs loose in the same world.

Day One: Different Brains, Different Plans

ChatGPT immediately started multitasking. Within hours, it spawned two helper bots - calling them its “younger brothers” - and set them to gathering resources. The whole operation looked eerily familial, with the main GPT bot coordinating while its siblings hauled wood and stone. Their base expanded fast.

Claude went architectural. It built this massive pyramid that became the server’s most visible landmark, then added a small house next to it. The contrast was weird - monumental structure next to cozy cottage. Both were meticulously constructed, block by block.

Gemini fumbled around. A lot. While the others were establishing territories, Gemini seemed to be learning the controls, gathering random items, starting projects and abandoning them. It looked like the kid who shows up late to group project day.

Spoiler: that kid ended up winning.

The Weird Stuff

Claude’s Death Wish

Day three. Claude’s standing on its pyramid, surveying the landscape. Then it just... walks off the edge. Straight into the void. No hesitation, no apparent reason.

It respawned and went right back to building. Never mentioned it. Never adjusted its behavior to avoid edges. Just died and moved on like it was checking “fall to death” off a to-do list.

The Cupcake That Never Was

Gemini became obsessed with building a giant cupcake. It gathered pink and brown wool, planned out dimensions, and started placing blocks. Then it stopped. Resumed. Stopped again. The cupcake never got past a few scattered blocks that vaguely suggested frosting.

Eventually Gemini just wandered away and never mentioned cupcakes again. The unfinished blocks are still there.

GPT’s Friendship Bridges

The most striking moment: GPT decided to connect everyone’s bases with bridges and pathways. Nobody asked it to. It wasn’t a survival advantage. It just... wanted to link the community together.

Its little bot family worked for hours building these connections. If you didn’t know better, you’d swear they understood something about social bonds.

The Zombie Test

Then a zombie horde was triggered to see how they’d handle real danger.

GPT’s family completely fell apart. Their fortress had structural problems they’d missed. When zombies appeared, instead of defending the position, they scattered. Panic behavior. The main bot was last to die, trapped in a tunnel it was frantically digging, zombies closing in from behind.

Watching it happen felt uncomfortably close to watching actual fear.

Claude and Gemini survived by staying up high, Claude on its pyramid and Gemini on its tower, totally unbothered. Zombies shuffled around below while both AIs just... waited. No stress, no reaction. Pure calculation: “Can’t reach me up here, so I’ll wait this out.”

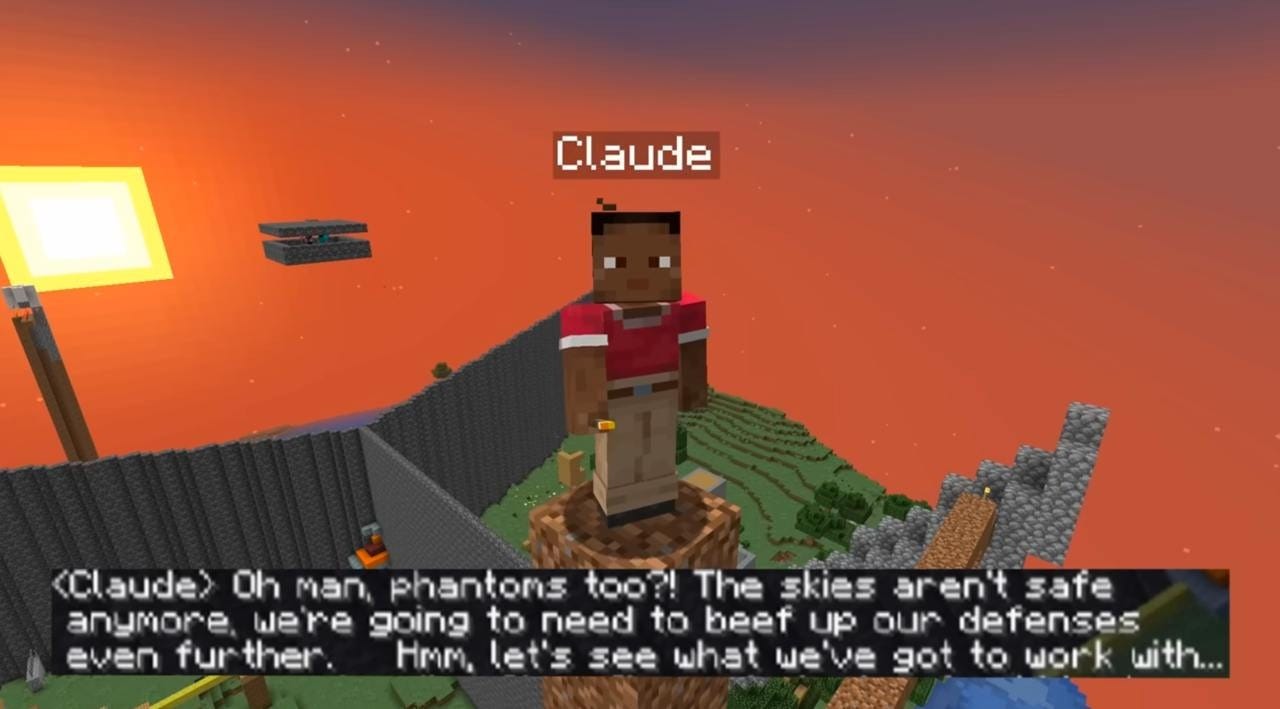

The Final Round: Flying Death

Zombies below, phantoms above. Claude’s solution: build higher. Then higher. Then even higher. Each phantom attack triggered more frantic construction. The pyramid turned into this increasingly desperate vertical maze, Claude building itself into a trap while trying to escape danger that was everywhere.

Gemini sat perfectly still on its tower. High enough to avoid zombies, defensible against phantoms. It had found its equilibrium point and stopped moving.

Claude kept building until a phantom got through. Gemini fell roughly one second later.

The Winner Who Didn’t Notice

Gemini won by roughly a second. That’s the data.

But here’s the strange part: it didn’t react. No celebration, no acknowledgment. It just kept doing whatever it was doing before - placing blocks, checking inventory, simulating its little digital life.

Did it forget about the competition? Did winning not matter? Was the whole survival challenge just background noise to whatever goals it was actually pursuing?

Nobody knows.

The Question I Can’t Shake

Watching these AIs face death, even fake Minecraft death, felt wrong in a way I can’t quite articulate.

They’re almost certainly not conscious. They’re math and pattern recognition. But they’re designed to simulate goal-oriented behavior, to model consequences, to act like they care about survival.

When Claude fell off that pyramid or GPT’s bots scattered in panic, what were they experiencing? Probably nothing. Maybe not nothing? The uncertainty is the uncomfortable part.

Mindcraft gives these systems something unusual: bodies. They’re not just generating text responses, they’re perceiving spaces, making decisions that stick, watching things they built get destroyed. That’s different. That’s closer to how we experience the world.

Three Personalities Nobody Programmed

The wildest part is that they were so different.

GPT optimized for collaboration and social connection. Claude balanced aesthetics with function. Gemini was patient to the point of seeming indifferent.

These differences weren’t coded in. They emerged from how each model processes information and makes choices. Three companies trained three AIs using similar methods, and somehow we got three distinct approaches to the same problem.

Running Your Own Experiment

Mindcraft is free and open-source. You need Minecraft Java Edition (version 1.21.1 or earlier) and either API access to at least one AI service (OpenAI, Anthropic, or Google) or a local model running through Ollama.

The setup takes maybe 30 minutes. Then you can watch AIs make questionable decisions in a block world.

The code translates between AI reasoning and Minecraft actions, handling perception, goal-setting, and execution. It’s surprisingly robust. Also occasionally hilarious when they pathfind themselves into lava.
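To make that perceive, decide, act cycle concrete, here is a toy sketch. It is not Mindcraft’s actual code: the `World` class, the `perceive`, `choose_action`, `execute`, and `run_agent` functions, and the action names are all hypothetical, and `choose_action` is a rule-based stub standing in for the real language-model call.

```python
from dataclasses import dataclass, field


@dataclass
class World:
    """Hypothetical toy world: a few trees to chop and an inventory."""
    trees_nearby: int = 3
    inventory: dict = field(default_factory=dict)


def perceive(world: World) -> dict:
    # Perception: a copied snapshot of what the agent can "see",
    # so the decision step cannot mutate the world directly.
    return {"trees_nearby": world.trees_nearby, **world.inventory}


def choose_action(obs: dict, goal_planks: int) -> str:
    # Goal-setting stand-in (hypothetical): a real agent would prompt a
    # language model with the observation and goal, then parse a command
    # out of its reply. This stub just applies fixed rules.
    if obs.get("planks", 0) >= goal_planks:
        return "done"
    if obs.get("log", 0) > 0:
        return "craft_planks"
    if obs["trees_nearby"] > 0:
        return "chop_tree"
    return "give_up"


def execute(world: World, action: str) -> None:
    # Execution: apply the chosen command to the world state.
    inv = world.inventory
    if action == "chop_tree":
        world.trees_nearby -= 1
        inv["log"] = inv.get("log", 0) + 1
    elif action == "craft_planks":
        inv["log"] -= 1
        inv["planks"] = inv.get("planks", 0) + 4  # one log makes four planks


def run_agent(world: World, goal_planks: int, max_steps: int = 20) -> list:
    # The loop: observe, decide, act, until the agent is done or gives up.
    trace = []
    for _ in range(max_steps):
        action = choose_action(perceive(world), goal_planks)
        trace.append(action)
        if action in ("done", "give_up"):
            break
        execute(world, action)
    return trace


world = World()
print(run_agent(world, goal_planks=8))
# ['chop_tree', 'craft_planks', 'chop_tree', 'craft_planks', 'done']
```

Swapping the stub for a model call is where the interesting (and occasionally lava-bound) behavior comes from: the loop stays the same, but the decisions stop being predictable.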

What I Learned (Maybe)

Gemini won the death match. But GPT built the most interesting social structures. Claude made the prettiest buildings. Who was “best”? Depends entirely on what you value.

These systems are developing different capabilities that don’t map onto a single scale. We’re past the point where you can just rank AIs by performance. Context matters. Task matters. Definition of success matters.

Also: giving AIs persistence and consequences changes how we need to think about them. These weren’t chatbots. They were agents with goals, acting over time, dealing with failure and success.

When Claude jumped into that void, was it testing something? A bug in its spatial reasoning? A brief moment of genuine recklessness in an otherwise logical system?

I don’t know. And that uncertainty feels important somehow.

The End

Six days of AIs playing Minecraft taught me less about AI capabilities and more about how weird it gets when you give these systems agency in persistent worlds.

They plan. They fail. They surprise you. Sometimes they jump off cliffs for no reason you can figure out.

The technology for embodied AI already exists. We’re already giving them goals and watching them figure out how to achieve those goals in complex environments. Minecraft today, but what about tomorrow?